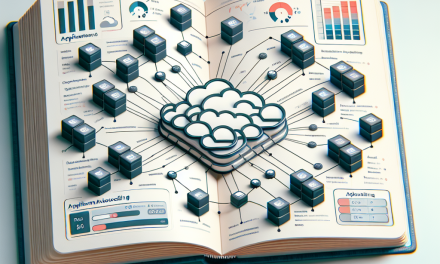

In today’s digital landscape, businesses are increasingly relying on cloud-native technologies to maintain a competitive edge. Kubernetes has emerged as the de facto standard for container orchestration, enabling organizations to manage, scale, and deploy applications seamlessly. However, as enterprises grow, so does the complexity of their infrastructure, particularly for geo-distributed clusters. This article explores best practices for scaling Kubernetes in a geo-distributed environment, focusing on maintaining availability, performance, and operational efficiency.

Understanding Geo-Distributed Clusters

A geo-distributed Kubernetes deployment runs nodes in multiple geographic locations, either as a single cluster stretched across regions or, more commonly, as several regional clusters managed together. This architecture offers several advantages, such as:

- Lower Latency: By deploying applications closer to users, organizations can reduce network round-trip times.

- High Availability: Geo-distributed clusters enhance disaster recovery capabilities, ensuring that services remain available even during regional failures.

- Regulatory Compliance: Organizations can host data in specific jurisdictions to comply with local regulations.

While the benefits are substantial, managing and scaling geo-distributed clusters can also introduce challenges in terms of consistency, network performance, and operational complexity. Here are some best practices to consider when scaling your geo-distributed Kubernetes cluster.

1. Strategic Node Placement

When designing your geo-distributed cluster, it’s crucial to strategically place your nodes. Consider placing nodes in regions that have:

- High User Density: Choose locations based on where your end-users are primarily located.

- Cost Efficiency: Evaluate the pricing and performance aspects of different cloud providers and regions.

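Kubernetes scheduling features such as node affinity and pod anti-affinity let you control where workloads land: node affinity pins pods to nodes carrying particular labels (for example, a region label), while pod anti-affinity keeps replicas of the same application apart. Used together, they help you maximize performance and reduce costs.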
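As a rough illustration, the sketch below builds a pod spec that requires nodes in certain regions and prefers to spread replicas across hosts. The helper name `pod_spec_for_regions`, the image, and the region names are made up for this example; the field names and the `topology.kubernetes.io/region` label follow the standard Kubernetes pod API.

```python
import json

# Well-known node label that records the region a node runs in.
REGION_LABEL = "topology.kubernetes.io/region"


def pod_spec_for_regions(image, regions, app_label):
    """Build a pod spec dict restricted to `regions` (hypothetical helper)."""
    return {
        "affinity": {
            "nodeAffinity": {
                # Hard requirement: schedule only onto nodes in the listed regions.
                "requiredDuringSchedulingIgnoredDuringExecution": {
                    "nodeSelectorTerms": [
                        {
                            "matchExpressions": [
                                {
                                    "key": REGION_LABEL,
                                    "operator": "In",
                                    "values": list(regions),
                                }
                            ]
                        }
                    ]
                }
            },
            "podAntiAffinity": {
                # Soft preference: avoid placing two replicas on the same host.
                "preferredDuringSchedulingIgnoredDuringExecution": [
                    {
                        "weight": 100,
                        "podAffinityTerm": {
                            "labelSelector": {"matchLabels": {"app": app_label}},
                            "topologyKey": "kubernetes.io/hostname",
                        },
                    }
                ]
            },
        },
        "containers": [{"name": app_label, "image": image}],
    }


# Example values are placeholders, not recommendations.
spec = pod_spec_for_regions("example.com/web:1.0", ["eu-west-1", "us-east-1"], "web")
print(json.dumps(spec, indent=2))
```

The resulting dict can be placed under `spec.template.spec` of a Deployment and serialized to JSON or YAML before applying it.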

2. Utilize Federation and Multi-Cluster Management

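Kubernetes Federation lets you manage multiple clusters across different regions as a single entity, which makes it easier to deploy applications consistently across clusters. Its reference implementation, KubeFed (kubefed), has since been archived by its maintainers, so for new deployments it is worth evaluating actively maintained alternatives such as Karmada or Open Cluster Management alongside it.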

Additionally, adopting multi-cluster management solutions such as Rancher or OpenShift can provide a unified interface for managing geo-distributed clusters, simplifying the networking, security, and application deployment processes.

3. Leverage a Global Load Balancer

A global load balancer distributes traffic across the regions of your geo-distributed cluster. It can route each request to the nearest healthy region or cluster, which improves application response times, and it can shift traffic away from a region that fails its health checks. Solutions like Google Cloud Load Balancing or AWS Global Accelerator can help in setting up efficient global load balancing.
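The routing decision itself can be sketched in a few lines. This is a simplified model, not how any particular provider implements it: the function name `pick_region`, the region names, and the latency figures are all assumptions for illustration.

```python
def pick_region(latency_ms, healthy):
    """Return the healthy region with the lowest latency, or None if none is healthy."""
    candidates = {
        region: ms for region, ms in latency_ms.items() if healthy.get(region, False)
    }
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


# Hypothetical latencies measured from one client location.
latency = {"eu-west": 20, "us-east": 95, "ap-south": 180}

print(pick_region(latency, {"eu-west": True, "us-east": True, "ap-south": True}))
print(pick_region(latency, {"eu-west": False, "us-east": True, "ap-south": True}))
```

With all regions healthy the client is sent to `eu-west`; once `eu-west` fails its health check, the same client falls back to `us-east`, the next-closest region.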

4. Monitor Network Latency and Performance

Network performance is a key consideration in a geo-distributed environment. Implementing robust monitoring tools like Prometheus, Grafana, or Datadog can help you gain insights into latency and throughput across your clusters. Regularly analyze this data to identify bottlenecks and optimize the performance of your applications.
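Averages tend to hide the slow requests that users actually notice, so tail percentiles are usually the more useful signal. The sketch below computes a nearest-rank p95 per inter-region link and flags links that exceed a latency budget. The sample data, the link names, and the 200 ms budget are invented for this example; in practice the samples would come from your monitoring system.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list; pct is an integer from 1 to 100."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def slow_links(latency_ms_by_link, p95_budget_ms):
    """Return the links whose p95 latency exceeds the budget, sorted by name."""
    return sorted(
        link
        for link, samples in latency_ms_by_link.items()
        if percentile(samples, 95) > p95_budget_ms
    )


# Hypothetical round-trip samples (ms) between region pairs.
samples = {
    "eu-west->us-east": [80, 82, 79, 85, 81, 83, 80, 84, 82, 81],
    "eu-west->ap-south": [140, 150, 145, 400, 148, 152, 149, 147, 151, 146],
}
print(slow_links(samples, p95_budget_ms=200))  # ['eu-west->ap-south']
```

The second link has a median well under the budget, but a single 400 ms outlier pushes its p95 over the line, which is exactly the kind of bottleneck an average would mask.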

5. Implement Data Consistency Strategies

Data consistency poses unique challenges in geo-distributed environments, especially when databases are replicated across regions. Use strategies like:

- Eventual Consistency: For applications where real-time data consistency is not critical, eventual consistency models can reduce latency.

- Geo-Partitioning: Store data closer to where it’s consumed, ensuring users access the data with lower latency.

Consider leveraging databases that natively support geo-replication, such as CockroachDB or Google Spanner, to manage data across regions effectively.
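To make the two strategies above concrete, here is a small sketch. Geo-partitioning is modeled as a lookup from a user's country to a home region, and eventual consistency is modeled with a last-writer-wins merge, one common (and lossy) way for replicas to converge. The mapping, the names, and the merge rule are assumptions for illustration; this is not how CockroachDB or Spanner work internally, and last-writer-wins depends on reasonably synchronized clocks.

```python
from dataclasses import dataclass

# Hypothetical mapping from user country to the region that stores their data.
HOME_REGION = {"DE": "eu-west", "FR": "eu-west", "US": "us-east", "IN": "ap-south"}
DEFAULT_REGION = "us-east"


def home_region(country_code):
    """Geo-partitioning: pick the region that should hold this user's data."""
    return HOME_REGION.get(country_code, DEFAULT_REGION)


@dataclass(frozen=True)
class Versioned:
    value: str
    timestamp: float  # writer's clock, in seconds
    region: str       # tie-breaker so every replica picks the same winner


def merge_lww(a, b):
    """Last-writer-wins merge: the result is the same whichever order replicas merge in."""
    return max(a, b, key=lambda v: (v.timestamp, v.region))


eu = Versioned("alice@new.example", timestamp=105.0, region="eu-west")
us = Versioned("alice@old.example", timestamp=100.0, region="us-east")

print(home_region("FR"))
print(merge_lww(eu, us).value)
```

Because the merge is deterministic and order-independent, two regions that exchange updates in either direction end up with the same value, at the cost of silently discarding the older write.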

6. Automate Cluster Management

Automation is your best friend when scaling Kubernetes clusters. Use Kubernetes Operators to manage application lifecycle events, and GitOps tools like Argo CD and Flux to automate application deployment from a Git repository. CI/CD pipelines can also streamline deployments across multiple clusters and regions.
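One way to keep many clusters consistent is to generate the per-cluster deployment objects from a single template rather than writing them by hand. The sketch below produces one Argo CD `Application` manifest per cluster. The helper name, repository URL, cluster names, and the `overlays/<cluster>` directory layout are assumptions for this example; the field names follow the Argo CD `Application` resource.

```python
import json


def argo_application(app, cluster, repo_url, revision="main"):
    """Build an Argo CD Application manifest for one cluster (hypothetical helper)."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": f"{app}-{cluster}", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": revision,
                # Assumed repo layout: one overlay directory per cluster.
                "path": f"overlays/{cluster}",
            },
            # `name` refers to a cluster already registered with Argo CD.
            "destination": {"name": cluster, "namespace": app},
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


CLUSTERS = ["eu-west", "us-east", "ap-south"]  # placeholder cluster names
apps = [
    argo_application("web", cluster, "https://example.com/org/deploy.git")
    for cluster in CLUSTERS
]
print(json.dumps(apps[0], indent=2))
```

Argo CD's ApplicationSet controller can generate objects like these itself, so a script of this kind is mainly useful for understanding the shape of the output or for bootstrapping.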

7. Establish Clear Security Protocols

Security becomes increasingly complex in geo-distributed environments. Implement role-based access control (RBAC), use network policies, and establish secure communication between clusters using a Virtual Private Network (VPN) or a service mesh like Istio or Linkerd.

Regularly audit your security settings to ensure compliance with best practices and mitigate risks.

8. Optimize Resource Allocation

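Resource allocation is critical in geo-distributed clusters. Use the Kubernetes Horizontal Pod Autoscaler (HPA) to adjust the number of pod replicas based on observed metrics such as CPU utilization, and the Vertical Pod Autoscaler (VPA) to adjust the CPU and memory requests of individual pods. Avoid letting both act on the same CPU or memory metric for the same workload, since they can work against each other. Define resource quotas per namespace in each cluster to prevent resource contention between teams and workloads.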
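The scaling rule the HPA applies is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces that rule so you can reason about how a regional traffic spike translates into replicas. The function name and the min/max defaults are choices made for this example, and the real controller also handles details such as pod readiness and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas,
    current_metric,
    target_metric,
    min_replicas=1,
    max_replicas=10,
    tolerance=0.1,
):
    """Simplified HPA rule: scale by the metric ratio, then clamp to the bounds."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Average CPU utilization (%) against a 60% target, starting from 4 replicas.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

Running the same calculation per region makes it easier to set `max_replicas` and namespace quotas that leave headroom for failover traffic from a neighboring region.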

Conclusion

Scaling Kubernetes in a geo-distributed environment gives organizations an opportunity to enhance the performance, availability, and resilience of their applications. By following these best practices (strategic node placement, federation, global load balancers, performance monitoring, data consistency strategies, automation, security, and resource optimization), you can effectively manage the complexities associated with geo-distributed clusters.

As Kubernetes continues to evolve, staying abreast of new features and best practices will ensure that your organization remains agile and responsive to user needs in an increasingly distributed world.