Introduction

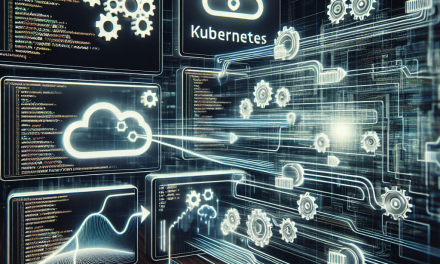

Kubernetes has become a dominant platform for container orchestration in cloud-native environments. Its scalability and resilience are well recognized, but managing job priorities remains a subtle art that can significantly affect application performance and resource utilization. This article explores strategies for managing job priorities in Kubernetes to improve resource allocation and application efficiency.

Understanding Kubernetes Jobs

Before diving into strategies, it’s crucial to understand what a Kubernetes Job is. A Job creates one or more pods and keeps retrying them until a specified number terminate successfully. The Job resource is ideal for batch processing or one-time tasks that need guaranteed completion. However, when multiple jobs run simultaneously, managing their execution order and resource access becomes essential.
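As a point of reference, a minimal Job manifest might look like the sketch below. The name, image, and command are hypothetical placeholders rather than anything taken from a real workload.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: report-builder        # hypothetical name, chosen for illustration
spec:
  completions: 3              # the Job is complete once 3 pods have succeeded
  parallelism: 2              # run at most 2 pods at the same time
  template:
    spec:
      restartPolicy: Never    # Job pods must use Never or OnFailure
      containers:
        - name: worker
          image: busybox:1.36 # placeholder image
          command: ["sh", "-c", "echo processing batch && sleep 5"]
```

Applying this with kubectl apply -f and then running kubectl get jobs should show the completion count rising as pods finish.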

Strategy #1: Prioritizing Resources through Resource Requests and Limits

Kubernetes allows you to define resource requests and limits for CPU and memory for each pod. This feature lets you prioritize jobs accordingly:

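- Resource Requests: Specify the resources the scheduler reserves for a pod. A pod is only placed on a node with enough unreserved capacity to cover its requests. Requests do not raise a pod's scheduling priority, but together with limits they determine its Quality of Service class (Guaranteed, Burstable, or BestEffort), which influences which pods are evicted first when a node runs short of resources.
- Resource Limits: Define the maximum resources a container can consume. A container that exceeds its CPU limit is throttled, and one that exceeds its memory limit may be terminated. This prevents any single job from monopolizing resources and adversely impacting other jobs.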

By setting appropriate requests and limits, you can ensure that critical jobs get the resources they need to function effectively while avoiding contention issues.
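A sketch of how requests and limits could be declared on a Job's container follows. The job name, image, and the specific CPU and memory values are assumptions chosen for illustration; appropriate numbers depend on the workload.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: nightly-etl             # hypothetical name
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: etl
          image: busybox:1.36   # placeholder image
          command: ["sh", "-c", "echo running etl"]
          resources:
            requests:           # capacity the scheduler reserves on the node
              cpu: "500m"
              memory: "256Mi"
            limits:             # hard ceiling enforced at runtime
              cpu: "1"
              memory: "512Mi"
```

Because the requests here are lower than the limits, the pod would fall into the Burstable Quality of Service class. Setting requests equal to limits for every container would make it Guaranteed, which is generally the last class to be evicted under node pressure.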

Strategy #2: Using Priority Classes

Kubernetes introduced the concept of Priority Classes to help manage job priorities more explicitly. By assigning different priority levels to Pods, you can influence the scheduling decisions of the Kubernetes scheduler. Here’s how you can effectively utilize Priority Classes:

- Define Priority Classes: Create priority classes in your cluster that match your business requirements. For example:
  - high-priority: For tasks that are crucial and must be executed immediately.
  - normal-priority: For standard workloads.
  - low-priority: For less significant jobs that can run at any time.
- Assign Pods to Classes: Use the priorityClassName field in the Pod specification (for a Job, in its pod template) to assign these classes. Pods with higher priority values are scheduled ahead of those with lower values and, when no node has room, may preempt lower-priority pods.

Implementing priority classes helps Kubernetes schedule the most important jobs first while ensuring that lower-priority jobs do not starve the higher-priority workloads.
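The two steps above can be sketched as a PriorityClass plus a Job that references it. The class name matches the example above; the numeric value, job name, and image are assumptions for illustration.

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1000000                  # illustrative value; higher numbers mean higher priority
globalDefault: false            # do not apply this class to pods that name no class
preemptionPolicy: PreemptLowerPriority   # the default; use Never to disable preemption
description: "For tasks that are crucial and must be executed immediately."
---
apiVersion: batch/v1
kind: Job
metadata:
  name: urgent-reconcile        # hypothetical name
spec:
  template:
    spec:
      priorityClassName: high-priority   # must match an existing PriorityClass
      restartPolicy: Never
      containers:
        - name: reconcile
          image: busybox:1.36   # placeholder image
          command: ["sh", "-c", "echo reconciling"]
```

The PriorityClass has to exist before the Job is created, otherwise the Job's pods are rejected. Since preemption can evict running low-priority pods, it is worth deciding deliberately whether a given class should be allowed to preempt.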

Strategy #3: Job Backoff Limit and Active Deadline

Managing job retries and timeouts is vital for efficient job execution. Kubernetes allows you to set a backoffLimit and activeDeadlineSeconds.

- Backoff Limit: Specifies how many times Kubernetes retries a failing Job before marking it as failed (the default is 6). A lower limit frees resources for higher-priority jobs sooner.
- Active Deadline: Sets a limit, in seconds, on how long a Job may run, counted from its start time and including any retries. Once the deadline is exceeded, Kubernetes terminates the Job's running pods and marks the Job as failed, freeing resources for pending tasks.

These configurations balance resource utilization and job prioritization, ensuring critical tasks are not delayed by long-running or failed jobs.
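Both settings live at the top level of the Job spec, as in the sketch below. The job name, image, and the chosen numbers are hypothetical; the simulated failure is there only to show retries being triggered.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: flaky-import            # hypothetical name
spec:
  backoffLimit: 2               # give up after 2 retries (default is 6)
  activeDeadlineSeconds: 600    # fail the Job if it is still running after 10 minutes
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: import
          image: busybox:1.36   # placeholder image
          command: ["sh", "-c", "echo importing && exit 1"]   # always fails, to exercise retries
```

Note that activeDeadlineSeconds takes precedence over backoffLimit: once the deadline passes, no further pods are started even if retries remain.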

Strategy #4: Horizontal Pod Autoscaling

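For workloads with variable load or sudden spikes in demand, Horizontal Pod Autoscaling can be an effective strategy. The HorizontalPodAutoscaler adjusts the number of pods in a scalable workload resource such as a Deployment or StatefulSet, rather than in a Job object itself, based on CPU utilization or other selected metrics. For batch processing it therefore fits workloads such as a Deployment of workers that pull tasks from a queue, and it can be instrumental in managing job priorities across your cluster.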

- Define Metrics: Choose appropriate metrics (CPU, memory, or custom metrics) based on your job characteristics.
- Set Scaling Policies: Determine how quickly your application should scale up or down based on incoming workload demands.

This dynamic approach ensures that more resources are available for important jobs during peak loads while scaling down when demand is lower, fostering an efficient resource management strategy.
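A minimal sketch of both steps, using the autoscaling/v2 API, is shown below. It assumes a Deployment named queue-worker already exists (a hypothetical name), that its containers declare CPU requests, and that a metrics source such as metrics-server is installed. The thresholds are illustrative.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: queue-worker            # hypothetical name
spec:
  scaleTargetRef:               # the workload to scale; assumed to exist already
    apiVersion: apps/v1
    kind: Deployment
    name: queue-worker
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70    # percent of the containers' CPU requests
  behavior:
    scaleUp:
      stabilizationWindowSeconds: 0   # react to spikes immediately
      policies:
        - type: Percent
          value: 100                  # at most double the replica count per minute
          periodSeconds: 60
    scaleDown:
      stabilizationWindowSeconds: 300 # wait 5 minutes before shrinking
      policies:
        - type: Pods
          value: 1                    # remove at most 1 pod per minute
          periodSeconds: 60
```

Scaling up quickly and down slowly is a common choice for bursty workloads, since it favors having capacity ready over reclaiming it early.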

Strategy #5: Utilizing Taints and Tolerations

Taints and tolerations in Kubernetes provide a mechanism for controlling pod placement and ensuring that only certain jobs can run on designated nodes.

- Taints: Apply taints to nodes to repel pods that do not tolerate those taints. This can be used to reserve specific nodes for high-priority jobs.
- Tolerations: Add tolerations to pod specifications that match the node taints, allowing those critical jobs to be scheduled on nodes designated for high-priority workloads. A toleration only permits placement on a tainted node; to steer pods onto those nodes, combine it with a node selector or node affinity.

By implementing taints and tolerations, you can create an environment where high-priority tasks are allocated to dedicated resources, thereby optimizing job execution and reducing contention.
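The sketch below shows one way this could be wired together. The taint key and value (dedicated=high-priority), the node label, the job name, and the image are all assumptions for illustration. The node is tainted and labeled with kubectl first, and the Job then tolerates the taint and selects the label.

```yaml
# Assumed one-time node setup (replace <node-name> with a real node):
#   kubectl taint nodes <node-name> dedicated=high-priority:NoSchedule
#   kubectl label nodes <node-name> dedicated=high-priority
apiVersion: batch/v1
kind: Job
metadata:
  name: critical-settlement     # hypothetical name
spec:
  template:
    spec:
      restartPolicy: Never
      tolerations:              # permits scheduling onto the tainted nodes
        - key: "dedicated"
          operator: "Equal"
          value: "high-priority"
          effect: "NoSchedule"
      nodeSelector:             # steers the pod onto the labeled nodes
        dedicated: high-priority
      containers:
        - name: settle
          image: busybox:1.36   # placeholder image
          command: ["sh", "-c", "echo settling"]
```

The taint keeps other pods off the reserved nodes, while the node selector keeps this Job's pods from landing elsewhere. Using only one of the two gives just half of that isolation.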

Conclusion

Effectively managing job priorities in Kubernetes is crucial for optimizing resource utilization and ensuring the smooth operation of cloud-native applications. By implementing strategies such as resource requests and limits, priority classes, job backoff limits, horizontal pod autoscaling, and taints with tolerations, you can manage workloads efficiently and meet the demands of your business.

As with any orchestration strategy, continuous monitoring and adjustment are essential to adapt to the needs of your applications. By harnessing the power of Kubernetes, you can ensure that critical tasks receive the attention they deserve, leading to a more robust and efficient deployment strategy.

For more insights on Kubernetes and cloud-native technologies, stay connected with WafaTech Blogs!