In the ever-evolving world of cloud-native applications, Kubernetes has emerged as the go-to orchestration platform. One of its most powerful features is the ability to automatically adjust the number of running pods based on demand. This capability, known as Pod Autoscaling, enhances application performance while optimizing resource usage. In this guide, we will explore the intricacies of Kubernetes Pod Autoscaling, offering insights to help you implement it efficiently.

What is Pod Autoscaling?

Pod Autoscaling in Kubernetes refers to the automatic adjustment of the number of pod replicas in a deployment based on observed metrics, typically CPU and memory usage. The primary components involved are:

- Horizontal Pod Autoscaler (HPA): Automatically scales the number of pods in a deployment or replica set based on observed metrics.

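- Cluster Autoscaler: Adjusts the number of nodes in a Kubernetes cluster, adding nodes when pods cannot be scheduled for lack of resources and removing nodes that stay underutilized. It complements HPA by making sure new replicas have somewhere to run.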

Why Use Pod Autoscaling?

- Resource Efficiency: Autoscaling allows Kubernetes to optimize resource usage effectively. By scaling down during low demand, you can save costs, particularly in cloud environments.

- Performance: Autoscaling ensures that your applications can handle increases in load without performance degradation.

- Resilience: Autoscaling contributes to application reliability by ensuring that services remain available under various load conditions.

How Does Pod Autoscaling Work?

1. Metrics Server

Before Kubernetes can scale your application, it needs to gather metrics. The Metrics Server is a cluster add-on that collects CPU and memory usage from the kubelet on each node and exposes it through the Kubernetes Metrics API. Ensure that the Metrics Server is installed and properly configured in your cluster; without it, HPA has no resource metrics to act on.

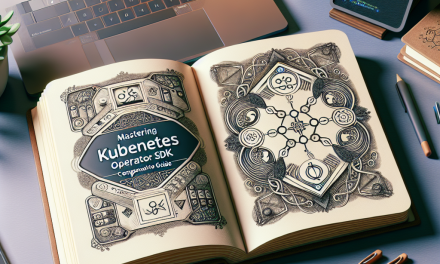

2. Configuring HPA

To enable autoscaling, you need to define an HPA resource. An example YAML configuration file might look like this:

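```yaml
# The metrics list below requires the autoscaling/v2 API
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: example-hpa
spec:
  # Workload whose replica count the HPA manages
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: example-deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          # Target average CPU usage, as a percentage of each pod's CPU request
          averageUtilization: 80
```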

Explanation:

- scaleTargetRef: Defines the deployment or replica set that the HPA targets.

- minReplicas: Minimum number of pod replicas to run.

- maxReplicas: Maximum number of pod replicas to handle load peaks.

- metrics: Specifies the criteria for scaling. In this case, HPA tries to keep average CPU utilization across the pods at 80% of their requested CPU.

3. Autoscaling Process

Once configured, the HPA controller checks the defined metrics periodically (every 15 seconds by default). When the observed value is above the target, it adds pod replicas, up to maxReplicas; when the value is below the target, it removes replicas, down to minReplicas.
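
The scaling decision can be written as a formula. The following is the replica calculation described in the Kubernetes HPA documentation, followed by a worked example. The figures in the example (4 replicas, 120% observed utilization) are made up for illustration, with the 80% target taken from the configuration above.

```latex
\[
\text{desiredReplicas} =
  \left\lceil \text{currentReplicas} \times
  \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
\]

% Worked example: 4 replicas averaging 120% CPU utilization against an 80% target
\[
\left\lceil 4 \times \frac{120}{80} \right\rceil = \lceil 6 \rceil = 6
\]
```

The result is then clamped to the minReplicas and maxReplicas range. By default the controller skips scaling when the ratio is within about 10% of 1.0, which avoids reacting to small fluctuations.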

Advanced Configuration Options

Custom Metrics

In addition to using CPU and memory, you can scale based on custom metrics. This requires a metrics adapter that implements the Kubernetes custom metrics API. A common setup is Prometheus with application metric exporters, plus an adapter that feeds the collected data into Kubernetes.
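
As an illustration, an HPA that scales on a per-pod custom metric might look like the sketch below. It assumes a metrics adapter (for example, the Prometheus Adapter) is already serving the custom metrics API. The metric name `http_requests_per_second` and the target value are hypothetical and depend on what your adapter exposes.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: example-hpa-custom
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: example-deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Pods
      pods:
        metric:
          name: http_requests_per_second   # hypothetical metric name
        target:
          type: AverageValue
          averageValue: "100"              # assumed target: 100 requests/s per pod
```

Avoid attaching more than one HPA to the same Deployment; if you want both CPU and a custom metric, list them together in a single `metrics` section.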

Multiple Metrics

HPA can also be configured to use multiple metrics for scaling decisions, such as a combination of CPU and memory usage, or application-specific metrics.
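
A sketch of a `metrics` section combining CPU and memory is shown below; the 80% and 75% targets are assumed values. When several metrics are listed, HPA calculates a replica count for each one and uses the largest.

```yaml
# Fragment of an autoscaling/v2 HorizontalPodAutoscaler spec
metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 80
  - type: Resource
    resource:
      name: memory
      target:
        type: Utilization
        averageUtilization: 75   # assumed value for illustration
```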

Queue Length Autoscaling

For applications using message queues (like Kafka or RabbitMQ), you can scale based on the length of the queue, ensuring that applications can process requests efficiently as demand fluctuates.
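
One way to express this is with an `External` metric, sketched below. It assumes an external metrics adapter (KEDA and the Prometheus Adapter are common choices) publishes the queue depth. The metric name `queue_messages_ready`, the `queue: orders` label, and the target of 30 messages per pod are all hypothetical.

```yaml
# Fragment of an autoscaling/v2 HorizontalPodAutoscaler spec
metrics:
  - type: External
    external:
      metric:
        name: queue_messages_ready     # hypothetical metric name
        selector:
          matchLabels:
            queue: orders              # hypothetical label
      target:
        type: AverageValue
        averageValue: "30"             # assumed: about 30 messages per replica
```

With an `AverageValue` target, the total queue length is divided by the current replica count, so a backlog of 300 messages would call for roughly 10 replicas.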

Best Practices for Pod Autoscaling

- Start with Conservative Limits: Begin with conservative values for min and max replicas to observe your application’s behavior under load before fine-tuning.

- Monitor Closely: Employ tools like Prometheus and Grafana to visualize metrics and understand application performance over time.

- Implement Resource Requests and Limits: Always define resource requests and limits for your pods. HPA measures CPU and memory utilization as a percentage of the requested amount, so without requests it cannot calculate utilization for those pods.

- Test Load Scenarios: Perform load testing to understand how your applications behave under stress and adjust your HPA settings accordingly.

- Review Regularly: Application demands and behaviors change. Regularly review your autoscaling policies to ensure they align with business needs.
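
To make the resource requests and limits recommendation concrete, here is a minimal Deployment sketch. The image name and the specific CPU and memory figures are placeholders rather than recommendations.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-deployment
spec:
  selector:
    matchLabels:
      app: example
  template:
    metadata:
      labels:
        app: example
    spec:
      containers:
        - name: app
          image: registry.example.com/example-app:1.0   # placeholder image
          resources:
            requests:
              cpu: 250m        # HPA utilization is measured against this
              memory: 256Mi
            limits:
              cpu: 500m
              memory: 512Mi
```

With a 250m CPU request and an 80% utilization target, HPA aims to keep average usage near 200m per pod. `spec.replicas` is left out on purpose so that re-applying the manifest does not reset the replica count chosen by HPA.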

Conclusion

Kubernetes Pod Autoscaling is a crucial feature for modern cloud-native applications, enabling efficient resource utilization and seamless user experiences. By understanding its components, configuration, and best practices, you can leverage autoscaling to enhance the performance and reliability of your applications at scale.

For WafaTech readers seeking to deepen their understanding of Kubernetes and improve their infrastructure, diving into Pod Autoscaling is an essential step. With the right configuration and monitoring, your applications can thrive in a dynamic cloud environment, adapting to ever-changing demands effortlessly. Happy scaling!