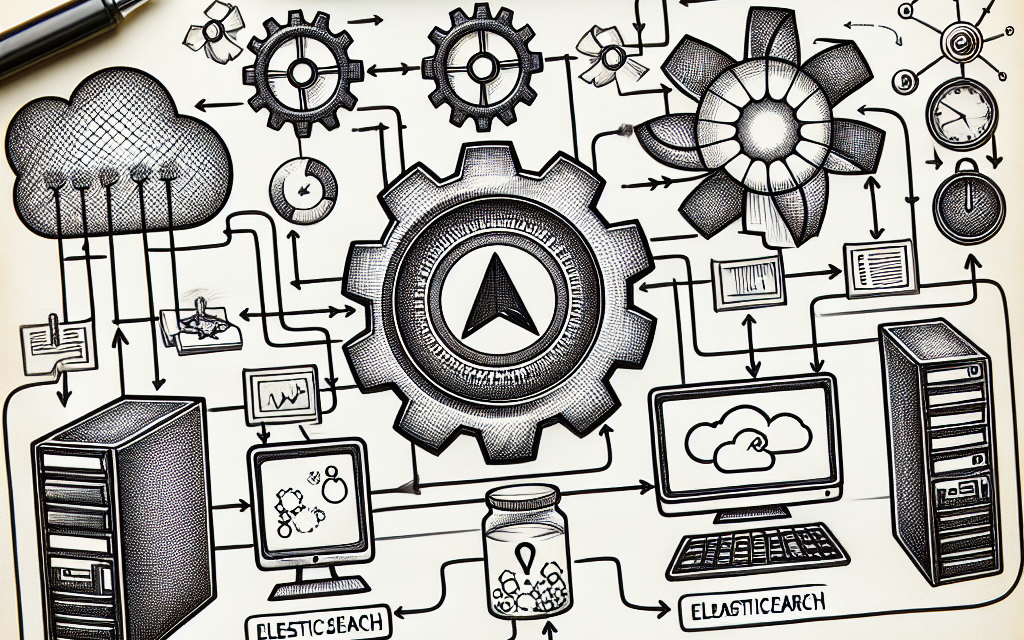

In the fast-paced world of software development and operations, effective log management is paramount. As organizations adopt container orchestration platforms like Kubernetes, managing logs gets harder: output is spread across many short-lived containers running on many nodes. Integrating Elasticsearch with Kubernetes gives teams one central place to store and search those logs, which makes troubleshooting faster and improves visibility into their applications.

Understanding the Importance of Log Management

Logs are vital for diagnosing application issues, monitoring performance, and ensuring compliance. In a microservices architecture they matter even more, because a single request can pass through several services, each writing its own logs. Without a robust log management solution, developers have to sift through a deluge of scattered output and lose time on problems that could have been solved quickly.

Challenges in Kubernetes Log Management

- Volume and Variety: Kubernetes clusters can generate overwhelming amounts of log data across many containers.

- Dynamic Environments: Containers are ephemeral. When a pod is deleted or rescheduled, the logs it wrote on its node are removed with it, so logs must be shipped off the node to survive.

- Distributed Systems Complexity: Traditional log management tools may not handle interconnected microservices well, leading to fragmented or incomplete data.

Why Choose Elasticsearch?

Elasticsearch, part of the Elastic Stack, is a search and analytics engine built to handle large volumes of data. Here’s why it’s a strong fit for Kubernetes log management:

- Near Real-Time Analytics: Elasticsearch indexes log data quickly, and new documents become searchable after the next index refresh (by default about once per second), so teams can investigate issues almost as they happen.

- Scalability: It scales horizontally. Adding nodes lets the cluster spread index shards across more machines, which suits the dynamic nature of Kubernetes.

- Powerful Search Capabilities: Elasticsearch’s full-text search capabilities make it easy to filter and analyze logs based on specific criteria.
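As a rough illustration of filtering on specific criteria, the sketch below builds a request body in Elasticsearch’s query DSL that combines exact-match filters with a full-text match. The field names (`kubernetes.namespace_name`, `level`, `message`, `@timestamp`) and the `.keyword` sub-fields are assumptions: they depend on your log shipper and on Elasticsearch’s default dynamic mapping, so adjust them to your own index mapping.

```python
import json


def build_log_query(namespace: str, level: str, text: str, minutes: int = 15) -> dict:
    """Build a query DSL body: exact filters plus a full-text match.

    Assumed field names; the .keyword sub-fields come from default
    dynamic mapping of string fields.
    """
    return {
        "query": {
            "bool": {
                # Exact-match filters: no scoring, and eligible for caching.
                "filter": [
                    {"term": {"kubernetes.namespace_name.keyword": namespace}},
                    {"term": {"level.keyword": level}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ],
                # Full-text match against the analyzed message field.
                "must": [{"match": {"message": text}}],
            }
        },
        "sort": [{"@timestamp": "desc"}],  # newest entries first
        "size": 50,
    }


body = build_log_query("payments", "error", "timeout")
print(json.dumps(body, indent=2))
```

The resulting dictionary can be sent as the JSON body of a `_search` request against your log indices.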

Setting Up Elasticsearch with Kubernetes

To optimize log management with Elasticsearch in a Kubernetes environment, follow these steps:

1. Deploy Elasticsearch on Kubernetes

Helm, the Kubernetes package manager, simplifies deploying Elasticsearch. A few commands install Elastic’s chart with its default settings; review and override its values (replicas, storage, resource limits, security) before relying on it in production.

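```bash
helm repo add elastic https://helm.elastic.co
helm repo update
# Install into the "logging" namespace that the Fluentd configuration in this guide points at
helm install elasticsearch elastic/elasticsearch --namespace logging --create-namespace
```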
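A quick way to confirm the deployment came up is the `_cluster/health` API, which reports a `green`, `yellow`, or `red` status. The sketch below is a minimal check under stated assumptions: the URL is hypothetical (for example, reached through `kubectl port-forward`), and clusters with security enabled need HTTPS and credentials, which this snippet does not handle.

```python
import json
import urllib.request

# Hypothetical endpoint, e.g. after:
#   kubectl port-forward svc/<elasticsearch-service> 9200:9200 -n <namespace>
# Secured clusters need https:// plus credentials, which this sketch omits.
ES_URL = "http://localhost:9200"

# Cluster health states, ordered from worst to best.
STATUS_RANK = {"red": 0, "yellow": 1, "green": 2}


def fetch_health(url: str = ES_URL) -> dict:
    """Call GET /_cluster/health and decode the JSON response."""
    with urllib.request.urlopen(f"{url}/_cluster/health", timeout=5) as resp:
        return json.load(resp)


def is_healthy(health: dict, minimum: str = "yellow") -> bool:
    """Return True if the reported status is at least `minimum`."""
    return STATUS_RANK[health["status"]] >= STATUS_RANK[minimum]
```

`is_healthy(fetch_health())` returns `True` once the cluster reports at least `yellow`. A single-node test cluster typically stays `yellow`, because replica shards cannot be allocated on the same node as their primaries.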

2. Implement Fluentd or Logstash for Log Forwarding

To gather logs from Kubernetes pods and send them to Elasticsearch, you can use Fluentd or Logstash. Fluentd has a smaller footprint than Logstash and is commonly run on every node of a Kubernetes cluster for exactly this job.

- Deploy Fluentd: Create a DaemonSet to ensure that a Fluentd instance runs on each node in your cluster, collecting logs from all containers.

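```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: logging
spec:
  # …
  template:
    # …
    spec:
      containers:
        - name: fluentd
          # Elasticsearch variant of the image; pin a specific tag in production
          image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
          env:
            # Must resolve to your Elasticsearch Service
            # (the elastic/elasticsearch chart names it elasticsearch-master by default)
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch.logging.svc.cluster.local"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
          volumeMounts:
            - name: varlog
              mountPath: /var/log
      volumes:
        # Node directory where the kubelet and container runtime write container logs
        - name: varlog
          hostPath:
            path: /var/log
```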
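To make "collecting logs from all containers" concrete: on nodes using containerd or CRI-O, each container’s output is written as lines of the form `<timestamp> <stream> <P|F> <message>`, and the kubelet exposes those files under `/var/log/containers/` with the pod, namespace, and container name encoded in the file name. The sketch below parses that format and recovers the metadata, roughly what Fluentd’s tail input and Kubernetes metadata filter do for you. It assumes the CRI log format; nodes using Docker’s `json-file` driver write JSON lines instead.

```python
import os
import re
from dataclasses import dataclass

# Base name of the kubelet symlinks in /var/log/containers:
#   <pod>_<namespace>_<container>-<64-hex container id>.log
FILENAME_RE = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_(?P<container>.+)-(?P<cid>[0-9a-f]{64})\.log$"
)


@dataclass
class LogRecord:
    time: str
    stream: str
    message: str
    pod: str
    namespace: str
    container: str


def parse_cri_lines(path: str, lines: list[str]) -> list[LogRecord]:
    """Parse CRI-format lines ("<time> <stream> <P|F> <content>") from one file.

    Partial chunks (tag "P") are buffered per stream and joined when the
    final chunk (tag "F") arrives; the record keeps the final chunk's time.
    """
    meta = FILENAME_RE.match(os.path.basename(path))
    if meta is None:
        raise ValueError(f"unexpected log file name: {path}")
    records: list[LogRecord] = []
    pending: dict[str, list[str]] = {}
    for line in lines:
        parts = line.rstrip("\n").split(" ", 3)
        time, stream, tag = parts[0], parts[1], parts[2]
        content = parts[3] if len(parts) > 3 else ""
        pending.setdefault(stream, []).append(content)
        if tag == "F":
            message = "".join(pending.pop(stream))
            records.append(LogRecord(time, stream, message,
                                     meta["pod"], meta["namespace"], meta["container"]))
    return records
```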

3. Configure Elasticsearch Output

If you supply your own Fluentd configuration, add a `<match>` block that uses the Elasticsearch output type so that matching events are forwarded to your Elasticsearch instance.

```text
<match **>
  @type elasticsearch
  host elasticsearch.logging.svc.cluster.local
  port 9200
  # Write to daily indices named logstash-YYYY.MM.DD
  logstash_format true
</match>
```
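With `logstash_format true`, the Elasticsearch output writes each event to a daily index named after its timestamp (by default `logstash-YYYY.MM.DD`, computed in UTC) and sends events in batches through the `_bulk` API. The sketch below mimics that naming and builds a bulk payload to show what lands in Elasticsearch. It is an illustration, not the plugin’s implementation, and it assumes each record carries a timezone-aware ISO 8601 `@timestamp`.

```python
import json
from datetime import datetime, timezone


def daily_index(ts: datetime, prefix: str = "logstash") -> str:
    """Daily index name in the logstash_format style: <prefix>-YYYY.MM.DD (UTC)."""
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


def bulk_body(records: list[dict]) -> str:
    """Serialize records as an Elasticsearch _bulk NDJSON payload."""
    lines = []
    for rec in records:
        ts = datetime.fromisoformat(rec["@timestamp"])  # must be timezone-aware
        lines.append(json.dumps({"index": {"_index": daily_index(ts)}}))  # action line
        lines.append(json.dumps(rec))                                     # document line
    return "\n".join(lines) + "\n"  # the bulk API requires a trailing newline
```

Note how the second test record, stamped 01:00 at UTC+2, still lands in the previous day’s index once converted to UTC.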

4. Use Kibana for Visualization

Once Elasticsearch receives logs, use Kibana to analyze and visualize the data. After you create a data view (index pattern) that matches your log indices, such as `logstash-*`, Kibana’s search view lets you navigate and filter individual log entries, while dashboards help you spot trends and highlight anomalies.
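Kibana’s trend charts are built on Elasticsearch aggregations. As a sketch of the idea, the snippet below requests error counts per time bucket with a `date_histogram` aggregation and flags buckets above a fixed threshold, a deliberately simple stand-in for real anomaly detection. The `level.keyword` field and the value `error` are assumptions about your log format.

```python
def error_trend_query(hours: int = 24, interval: str = "1h") -> dict:
    """Count error-level log lines per time bucket over the last `hours` hours."""
    return {
        "size": 0,  # return only aggregation results, no individual documents
        "query": {
            "bool": {
                "filter": [
                    {"term": {"level.keyword": "error"}},  # assumed field name
                    {"range": {"@timestamp": {"gte": f"now-{hours}h"}}},
                ]
            }
        },
        "aggs": {
            "errors_over_time": {
                "date_histogram": {"field": "@timestamp", "fixed_interval": interval}
            }
        },
    }


def spikes(response: dict, threshold: int) -> list[str]:
    """Return the bucket timestamps whose error count exceeds `threshold`."""
    buckets = response["aggregations"]["errors_over_time"]["buckets"]
    return [b["key_as_string"] for b in buckets if b["doc_count"] > threshold]
```

`error_trend_query()` is sent as the body of a `_search` request; `spikes()` then reads the `buckets` list from the decoded response.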

5. Implement Retention Policies

With Elasticsearch, you can set up index lifecycle management (ILM) policies to control log retention. Once a policy is attached to your log indices, older indices are deleted automatically, keeping storage costs manageable while retaining enough history for compliance or auditing purposes.
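A minimal retention setup needs two pieces: an ILM policy with a `delete` phase, and an index template that attaches the policy to new log indices through the `index.lifecycle.name` setting. The sketch below builds both request bodies; the policy name, the 30-day period, and the `logstash-*` pattern (matching `logstash_format` indices) are illustrative assumptions. For indices that are never rolled over, `min_age` is measured from the index creation date.

```python
import json


def retention_policy(delete_after_days: int) -> dict:
    """ILM policy body that deletes an index once it is older than N days."""
    return {
        "policy": {
            "phases": {
                "delete": {
                    "min_age": f"{delete_after_days}d",
                    "actions": {"delete": {}},
                }
            }
        }
    }


def logs_index_template(policy_name: str, pattern: str = "logstash-*") -> dict:
    """Composable index template that attaches the ILM policy to new indices."""
    return {
        "index_patterns": [pattern],
        "template": {"settings": {"index.lifecycle.name": policy_name}},
    }


# Hypothetical names; send these with any HTTP client:
#   PUT _ilm/policy/logs-30d           <- retention_policy(30)
#   PUT _index_template/logs-retention <- logs_index_template("logs-30d")
print(json.dumps(retention_policy(30)))
```

The template only affects indices created after it exists, so existing indices need the `index.lifecycle.name` setting applied separately.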

Best Practices for Optimizing Log Management

- Structured Logging: Use structured logging so that logs are easy to parse and search. Include metadata such as timestamp, log level, service name, and request ID.

- Centralized Logging: Always centralize logs from all services and nodes to enhance visibility and make troubleshooting easier.

- Monitor Resource Usage: Regularly monitor the resource usage of your Elasticsearch cluster to ensure optimal performance and capacity planning.

- Use Kibana Alerts: Set up notifications in Kibana to alert your team of anomalies or specific error patterns in your logs.
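For structured logging, each log line can be a single JSON object written to stdout, where the node-level collector picks it up. The sketch below uses Python’s standard `logging` module; the field names (`service`, `request_id`, a lowercase `level`) are illustrative choices, not a required schema.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "@timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname.lower(),
            "service": self.service,
            # Optional per-request field, passed via `extra={"request_id": ...}`
            "request_id": getattr(record, "request_id", None),
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(service: str) -> logging.Logger:
    """Logger that writes JSON lines to stdout, where container logs are collected."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter(service))
    logger = logging.getLogger(service)
    logger.handlers[:] = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


log = make_logger("checkout")
log.info("payment authorized", extra={"request_id": "req-123"})
```

Each call emits one JSON line; a JSON parser in the log pipeline can then turn those keys into searchable document fields.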

Conclusion

Optimizing Kubernetes log management can significantly enhance your team’s ability to troubleshoot issues, monitor application performance, and ensure compliance. By leveraging Elasticsearch in tandem with Kubernetes, organizations can create a robust logging infrastructure that not only handles the volume of log data generated but also provides actionable insights. Embrace the power of Elasticsearch to transform your logging strategy and enjoy a more streamlined, efficient operational workflow.

For more insights and updates on Kubernetes and log management, stay tuned to WafaTech Blogs!