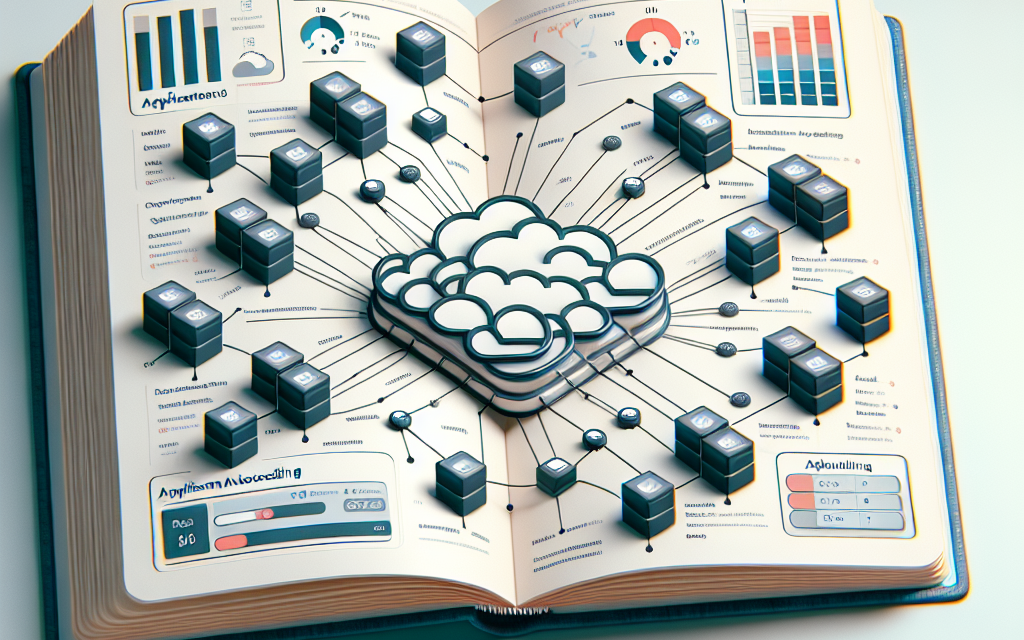

Kubernetes has revolutionized the way we deploy, manage, and scale applications in the cloud-native era. Among its myriad features, application autoscaling stands out as a cornerstone capability that enables organizations to optimize resource usage and improve application performance dynamically. In this comprehensive guide, we will delve into Kubernetes application autoscaling, exploring its mechanisms, benefits, and implementation strategies.

What is Kubernetes Application Autoscaling?

Kubernetes application autoscaling refers to the automatic adjustment of the number of running instances (pods) of a given application based on demand. This process helps ensure that applications are responsive under varying loads without user intervention. By integrating autoscaling, organizations can not only efficiently allocate resources but also reduce costs by minimizing idle resource usage.

Key Autoscaling Components

- Kubernetes Horizontal Pod Autoscaler (HPA): The HPA scales the number of pods in a Deployment or ReplicaSet based on resource utilization metrics, such as CPU or memory. You specify a target for the cpu or memory metric, and the HPA adjusts the number of pods to keep the observed average close to that target.

- Vertical Pod Autoscaler (VPA): Unlike the HPA, which changes the number of instances, the VPA adjusts the resource allocations of existing pods (CPU and memory requests, with limits scaled to match). This ensures that applications are allocated the necessary resources based on observed usage.

- Cluster Autoscaler: The Cluster Autoscaler is slightly different; it adjusts the number of nodes in the Kubernetes cluster itself. It adds nodes when pods cannot be scheduled due to resource constraints and removes nodes when they become underutilized.
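To make the HPA's behavior more concrete, the Kubernetes documentation describes its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The following is a minimal sketch of that arithmetic in shell. The function name desired_replicas is hypothetical, and the real controller additionally applies a tolerance band, the minReplicas/maxReplicas bounds, and stabilization windows, all of which are omitted here.

```shell
# Sketch of the HPA's core formula using integer arithmetic:
#   desired = ceil(current_replicas * current_metric / target_metric)
# Arguments: current replica count, observed metric value, target metric value
# (both metric values in the same unit, e.g. percent CPU utilization).
desired_replicas() {
  echo $(( ($1 * $2 + $3 - 1) / $3 ))
}

desired_replicas 4 80 50   # 4 pods at 80% against a 50% target -> prints 7
desired_replicas 4 20 50   # 4 pods at 20% against a 50% target -> prints 2
```

Because the result is rounded up, the HPA tends to err on the side of one extra pod rather than running slightly under capacity.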

Benefits of Application Autoscaling

- Cost Efficiency: By dynamically scaling resources based on real-time demand, organizations can optimize costs and avoid over-provisioning.

- Improved Performance: Autoscaling helps applications remain responsive even during traffic spikes, enhancing user experience.

- Resource Optimization: With the HPA adjusting replica counts and the VPA adjusting per-pod requests, organizations can fine-tune resource allocation so that applications use only what they need. The two should not both act on the same CPU or memory metric for one workload, since their adjustments would conflict.

- Operational Agility: Autoscaling enables rapid response to changes in workload without manual intervention, allowing DevOps teams to focus on more critical tasks.

How to Implement Autoscaling in Kubernetes

Step 1: Enable Metrics Server

Before implementing HPA or VPA, you need to have a metrics server running in your cluster to collect resource usage data. You can deploy it by applying the official metrics server manifests:

    kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Step 2: Create a Deployment

Create a Deployment that defines your application.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app
        spec:
          containers:
          - name: my-app
            image: my-app-image:latest
            resources:
              requests:
                cpu: "200m"
                memory: "512Mi"
              limits:
                cpu: "1"
                memory: "1Gi"

Step 3: Create a Horizontal Pod Autoscaler

Now, create an HPA resource to scale your deployment based on CPU usage.

    # autoscaling/v2 has been stable since Kubernetes 1.23; v2beta2 was removed in 1.26
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: my-app-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: my-app
      minReplicas: 1
      maxReplicas: 10
      metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 50

This configuration sets the average CPU utilization target at 50% of each pod's CPU request, with a minimum of one pod and a maximum of ten.
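One detail worth spelling out: a Utilization target is measured against each pod's CPU request, not its limit. As a rough illustration using the 200m request from the Deployment above, the shell arithmetic below converts between millicores and utilization. The function names and the 180m usage figure are made up for the example.

```shell
# Utilization targets are relative to the container's CPU *request*.
# All CPU values are in millicores; the 200m request comes from the Deployment.

# Average per-pod CPU usage that corresponds to a utilization target.
# Arguments: request in millicores, target in percent.
target_millicores() {
  echo $(( $1 * $2 / 100 ))
}

# Utilization the HPA would compute for a given average usage.
# Arguments: usage in millicores, request in millicores.
utilization_percent() {
  echo $(( $1 * 100 / $2 ))
}

target_millicores 200 50      # prints 100: the HPA aims for about 100m per pod
utilization_percent 180 200   # prints 90: above the 50% target, so the HPA scales out
```

This is also why the HPA cannot act on a CPU utilization target for pods whose containers have no CPU request set.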

Step 4: (Optional) Create a Vertical Pod Autoscaler

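If you want to use VPA, you can create a VPA resource as well. Note that the VPA is not part of a default Kubernetes installation; its components and custom resource definitions must be installed first (they are maintained in the kubernetes/autoscaler project). Avoid combining it with the CPU-based HPA from Step 3 on the same Deployment, since both would react to the same metric.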

    apiVersion: autoscaling.k8s.io/v1
    kind: VerticalPodAutoscaler
    metadata:
      name: my-app-vpa
    spec:
      targetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: my-app
      updatePolicy:
        updateMode: "Auto"

This will automatically adjust the resource requests and limits based on observed usage.
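Before relying on Auto mode, it can help to look at what the VPA is actually recommending. The sketch below assumes the VPA components and CRDs are already installed in the cluster. vpa_targets is a hypothetical helper name, while status.recommendation.containerRecommendations is the field where the VPA publishes its per-container recommendations.

```shell
# Print the recommended resource targets the VPA has computed per container.
# Assumes the VerticalPodAutoscaler CRD is installed; "vpa_targets" is a
# hypothetical helper name used only for this example.
vpa_targets() {
  kubectl get vpa "$1" \
    -o jsonpath='{range .status.recommendation.containerRecommendations[*]}{.containerName}{": "}{.target}{"\n"}{end}'
}

# Usage: vpa_targets my-app-vpa
```

The recommendation may be empty for a while after the VPA is created, since it is derived from observed usage over time.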

Step 5: Monitor Autoscaling

After deploying your application and the autoscaler configurations, monitor their performance with Kubernetes commands:

    kubectl get hpa
    kubectl describe hpa my-app-hpa

You can also use tools like Grafana and Prometheus for more sophisticated monitoring and visualization.
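For a quick look from the command line, these checks can be bundled into a small helper. This is only a sketch: check_autoscaling is a hypothetical name, it assumes the my-app and my-app-hpa names from the earlier manifests, and kubectl top only returns data once the metrics server from Step 1 is running.

```shell
# Hypothetical helper that gathers the main autoscaling signals for one app.
# Argument: application name, e.g. my-app
check_autoscaling() {
  # Current CPU and memory per pod, selected by the Deployment's label.
  kubectl top pods -l "app=$1"
  # Target vs. current utilization and the replica bounds.
  kubectl get hpa "$1-hpa"
  # Replica count the Deployment currently reports.
  kubectl get deployment "$1" -o jsonpath='{.status.replicas}{"\n"}'
}

# Usage: check_autoscaling my-app
# To follow changes as load varies: kubectl get hpa my-app-hpa --watch
```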

Conclusion

Kubernetes application autoscaling is an essential feature that empowers organizations to manage application workloads with efficiency and ease. By leveraging HPA, VPA, and Cluster Autoscaler, businesses can achieve optimal resource allocation, cost savings, and enhanced performance. As enterprises increasingly rely on cloud-native technologies, mastering autoscaling in Kubernetes will become a strategic advantage.

In summary, embracing Kubernetes application autoscaling not only streamlines operations but ultimately fosters a more responsive and resilient architecture—a foundation for future innovation in the cloud-native landscape. Follow WafaTech Blogs for more in-depth articles and updates on Kubernetes and other cutting-edge technologies.